Published On Aug 5, 2024

Here we dive into Unlimiformer with lead author Amanda Bertsch herself! Amanda gives us a presentation on Unlimiformer and long-context models, and she answers several questions from our divers. If you want to ask questions yourself the next time we have an author on, click the link 👇

Join Arxiv Dives 🤿 https://oxen.ai/community

--

Use Oxen AI 🐂 https://oxen.ai

Oxen AI makes versioning your datasets as easy as versioning your code! Even with millions of unstructured images, the tool quickly handles any type of data so you can build cutting-edge AI.

--

Paper 📜 https://arxiv.org/abs/2305.01625

Links + Notes 📝 https://www.oxen.ai/blog/arxiv-dives

Discord 🗿 / discord

--

Chapters

0:00 Intro

2:25 How Do You Do Book Summarization?

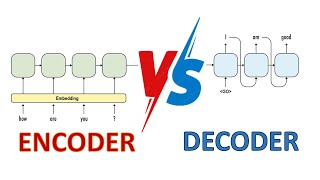

4:51 Unlimiformer Encoder-Decoder

6:55 Questions

9:25 How Does Unlimiformer Choose What To Retrieve?

12:12 Mapping Context Window

13:42 Questions

24:26 Higher Cost Training

26:35 How Do We Train Unlimiformer?

28:36 Results

34:22 EntMent

37:00 Computational Cost

38:50 Long Context Attention as Embedding Retrieval

39:39 How Do You Adapt This For Decoder-Only Models?